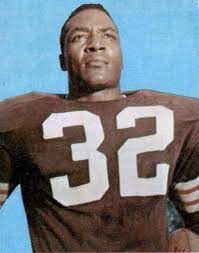

Jim Brown died last week. Although I only saw Brown at the end of his career and at the start of my NFL fandom, he was the best player I ever saw. He is certainly the greatest running back of all time, even if others have since exceeded the yards he gained the hard way, on the ground. A multi-sport All-American at Syracuse University in both football and lacrosse (and in the Hall of Fame for both sports), Brown played for the Cleveland Browns under legendary coach Paul Brown. According to his New York Times (NYT) obituary, “Brown was voted football’s greatest player of the 20th century by a six-member panel of experts assembled by The Associated Press in 1999. A panel of 85 experts selected by NFL Films in 2010 placed him No. 2 all time behind the wide receiver Jerry Rice of the San Francisco 49ers.” The legendary New York Giants linebacker Sam Huff said of Brown, “All you can do is grab, hold, hang on and wait for help.”

Brown was equally famous for his life after football where he was an action movie star, most notably (for me) in The Dirty Dozen. More importantly he was a voice of social conscience as well. According to the NYT, “he founded the Negro Industrial and Economic Union (later known as the Black Economic Union) as a vehicle to create jobs. It facilitated loans to Black businessmen in poor areas — what he called Green Power — reflecting his long-held belief that economic self-sufficiency held more promise than mass protests.” He later founded “the Amer-I-Can Foundation to teach basic life skills to gang members and prisoners, mainly in California, and steer them away from violence. The foundation expanded nationally and remains active.”

But I will always remember the highlights of the greatest running back ever: breaking tackles and outrunning all defenders to daylight and the end zone.

The Call For Regulation

Brown’s social advocacy informs today’s post about the coming regulation of AI. Last week, we were all treated to the spectacle (yet again) of another tech entrepreneur testifying before Congress, asking its members to do the job they no longer seem capable of doing: passing legislation. Writing in the Harvard Business Review, in an article entitled “Who Is Going to Regulate AI?”, Blair Levin and Larry Downes noted that “OpenAI chief executive Sam Altman said it was time for regulators to start setting limits on powerful AI systems.” They then quoted the following from his testimony: “As this technology advances we understand that people are anxious about how it could change the way we live. We are too…If this technology goes wrong, it can go quite wrong, [with] significant harm to the world.” Altman agreed with lawmakers that government oversight will be critical to mitigating the risks.

Who Will Regulate AI?

There is no shortage of potential government actors who might step in to regulate AI. As the authors note, “First, there’s Congress, where Senate Majority Leader Chuck Schumer is calling for preemptive legislation to establish regulatory “guardrails” on AI products and services. The guardrails focus on user transparency, government reporting, and “aligning these systems with American values and ensuring that AI developers deliver on their promise to create a better world.” The vagueness of this proposal, however, isn’t promising.”

Next is the Biden Administration, which released a White House Blueprint for an AI Bill of Rights last October. Here the authors said, “The blueprint is similarly general, calling for developers to ensure “safe and effective” systems that don’t discriminate or violate expectations of privacy and that explain when a user is engaging with an automated system and offer human “fallbacks” for users who request them.”

Next, at the Department of Commerce, the National Telecommunications and Information Administration (NTIA) has begun to explore the “usefulness of audits and certifications for AI systems. The agency has requested comments on dozens of questions about accountability for AI systems, including whether, when, how, and by whom new applications should be assessed, certified, or audited, and what kind of criteria to include in these reviews.”

Federal Trade Commission (FTC) Chair Lina Khan is also turning in the direction of the new technology, looking at AI regulation through a competition and consumer protection lens. The authors write that “Khan speculates that AI could exacerbate existing problems in tech, including “collusion, monopolization, mergers, price discrimination, and unfair methods of competition.” Generative AI, the FTC chair also believes, “risks turbocharging fraud” with its ability to create false but convincing content.” Khan has also expressed concern about the inherent bias in AI and its discriminatory impact.

Finally, there is the question of whether the Department of Commerce can muster “a sustainable certification process, or the political clout to get the tech industry to support NTIA’s efforts. Further, as the Department acknowledges, its inquiry is only part of the larger White House effort to create a trusted environment for AI services, an objective that would require previously unseen levels of coordination and cooperation across numerous government silos.”

What Should Compliance Do?

I certainly believe there will be a combination of government actions. As the authors note, action in the “legislative, regulatory, or judicial” sphere will be a “balancing act of maximizing the value of AI while minimizing its potential harm to the economy or society more broadly.” But as is well known, law advances incrementally while technology evolves exponentially. I agree with the authors that compliance professionals “should take their cue from the Department of Commerce’s ongoing initiative, and start to develop nongovernmental regulators, audits, and certification processes that identify and provide market incentives to purchase ethical and trusted AI products and services, making clear which applications are and are not reliable.”